As I made my way through the dynamic, technology-driven world, I developed a lasting fascination with Artificial Intelligence (AI). In this article, I intend to share my insights on AI, distinguish it from two of its popular subfields, Machine Learning (ML) and Deep Learning (DL), and explain why AI is not just a technological marvel but a significant business asset. Lastly, I will touch on common fears about AI and advocate for a more informed and less apprehensive approach.

Defining AI

My Journey with AI

Understanding the basis of AI has taken me on a journey to learn what AI really means. At its core, AI is the science of creating machines that can perform tasks that would require intelligence if done by humans. These tasks involve capabilities such as reasoning, learning, problem-solving, perception, and language understanding.

When I attended the Executive Programme “Managing Artificial Intelligence” at LUISS Business School, I learned an interesting definition of Artificial Intelligence, namely the one provided by the Oxford Dictionary: “Software used to perform tasks or produce output previously thought to require human intelligence, esp. by using machine learning to extrapolate from large collections of data”.

Given that John McCarthy coined the term ‘Artificial Intelligence’, I believe it’s fair to recall his own definition of AI: “It is the science and engineering of making intelligent machines, especially intelligent computer programs. It is related to the similar task of using computers to understand human intelligence, but AI does not have to confine itself to methods that are biologically observable.”

AI in Context

The idea of AI has deep roots, reaching back to the artificial beings imagined in the myths of ancient civilizations. The modern concept of AI, however, took shape in the 20th century, when pioneers like Alan Turing (London 1912 – Cheshire 1954) laid the groundwork by proposing machines that could simulate human intelligence.

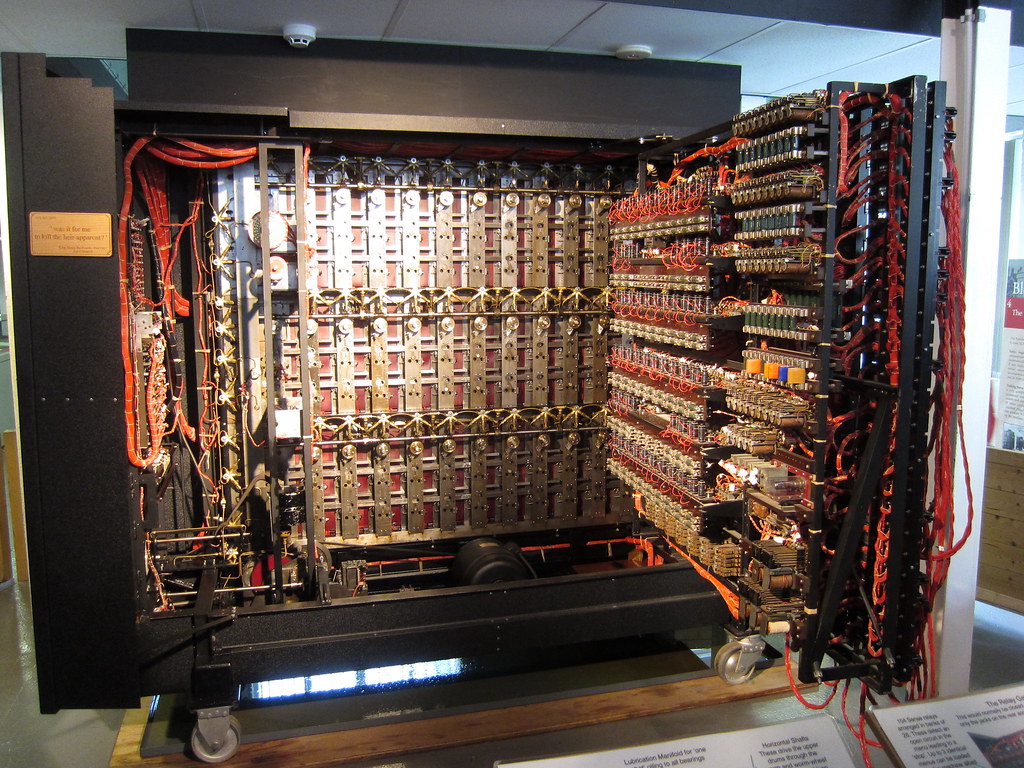

Turing’s most notable contribution is the concept of the Turing Machine, a hypothetical device that manipulates symbols on a strip of tape according to a set of rules. This concept, foundational in computer science, captured the principles of algorithmic computation and laid the groundwork for modern computers. Another significant contribution is the Turing Test, a criterion for machine intelligence: a human judge, conversing with the machine by text alone, should be unable to reliably tell it apart from another human being. This concept continues to influence AI development and philosophical discussions about machine intelligence.
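To make the idea of a Turing Machine more concrete, here is a minimal sketch in Python. It is my own illustration, not something from Turing’s paper or from the programme: a tape, a read/write head, a current state, and a rule table that maps (state, symbol read) to (symbol to write, direction to move, next state). The example machine, which adds 1 to a binary number, and the names `run_turing_machine` and `INCREMENT_RULES` are hypothetical choices made for brevity.

```python
from typing import Dict, Tuple

# Rule table: (state, symbol read) -> (symbol to write, move, next state).
# move is -1 for left and +1 for right; "_" stands for a blank cell.
Rules = Dict[Tuple[str, str], Tuple[str, int, str]]

# Hypothetical example machine: binary increment.
# "right": walk right to the end of the number; "carry": add 1, propagating carries leftwards.
INCREMENT_RULES: Rules = {
    ("right", "0"): ("0", +1, "right"),
    ("right", "1"): ("1", +1, "right"),
    ("right", "_"): ("_", -1, "carry"),
    ("carry", "1"): ("0", -1, "carry"),  # 1 + 1 = 0, carry continues
    ("carry", "0"): ("1", -1, "done"),   # 0 + 1 = 1, no more carry
    ("carry", "_"): ("1", -1, "done"),   # ran off the left edge: new leading 1
}

def run_turing_machine(rules: Rules, tape: str, state: str, halt: str,
                       head: int = 0, max_steps: int = 10_000) -> str:
    """Simulate a single-tape Turing machine and return the non-blank tape contents."""
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    steps = 0
    while state != halt:
        if steps >= max_steps:
            raise RuntimeError("machine did not halt within max_steps")
        symbol = cells.get(head, "_")           # unwritten cells read as blank
        write, move, state = rules[(state, symbol)]
        cells[head] = write                     # write, then move the head
        head += move
        steps += 1
    # Read the tape back from left to right and trim surrounding blanks.
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, "_") for i in range(lo, hi + 1)).strip("_")

print(run_turing_machine(INCREMENT_RULES, "1011", "right", "done"))  # 11 + 1 = 12 -> 1100
```

The point is that the machine “knows” nothing beyond its small rule table. Yet the same loop of reading a symbol, writing one, moving, and changing state can, with a suitable table, carry out any algorithm.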

During World War II, Turing played a pivotal role in breaking German ciphers, significantly contributing to the Allied victory. His work in cryptanalysis demonstrated practical applications of theoretical concepts, blending mathematics, logic, and computation.

Turing’s theories remain central to AI and computing. The Turing Award, often regarded as the “Nobel Prize of Computing,” honors individuals for significant contributions to the field, reflecting Turing’s enduring legacy. His ideas continue to inspire and guide contemporary AI research, underlining his status as a founding father of the field. His visionary work in the mid-20th century laid the cornerstones for what would become AI, and his legacy persists not only in the technologies we use today but in the very way we conceptualize and approach computational problems. Turing’s life and work remain a testament to the profound impact one individual can have on the future of technology.

“The Imitation Game” (2014) is a fine film about Turing’s life and codebreaking work, based on the 1983 biography “Alan Turing: The Enigma” by Andrew Hodges. It captures well the spirit in which Turing approached his personal challenge.

John McCarthy (Boston 1927 – Stanford 2011), often recognized as the father of AI, coined the term “Artificial Intelligence” in 1955. Unlike Turing, who laid the theoretical groundwork, McCarthy was instrumental in establishing AI as a distinct academic discipline. While Turing conceptualized machine intelligence and computational principles, McCarthy focused on creating programming languages (like Lisp) and environments conducive to AI research. This practical approach complemented Turing’s theoretical models, moving AI from abstract concepts to tangible experimentation.

Marvin Minsky (New York 1927 – Boston 2016), a contemporary of McCarthy, significantly influenced the field of AI through his work on neural networks and cognitive models. Turing envisioned machines that could mimic general human intelligence. In contrast, Minsky concentrated on specific aspects of intelligence, such as learning and perception, developing the concept of “frames” as data structures for representing stereotyped situations. This approach marked a departure from Turing’s broader perspective, focusing on specialized AI systems.

Dipping into the Subfields of AI

Machine Learning: Beyond Algorithms

What I’ve learnt is that Machine Learning is a subset of AI. It focuses on developing algorithms that allow machines to learn from data and make decisions based on it. In traditional programming, we give the computer precise instructions for every case. In ML, the algorithm learns patterns from example data and makes decisions with minimal human intervention.
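The contrast between writing rules and learning them can be shown in a few lines of Python. This is a minimal sketch under clearly stated assumptions. The task (labelling a temperature as “hot”) and the training data are hypothetical. The “learning” method is a decision stump, one of the simplest ML models, which picks the single threshold that misclassifies the fewest labelled examples. Real ML systems use far richer models, but the principle is the same: the rule comes from the data, not from the programmer.

```python
from typing import List, Tuple

# Traditional programming: a human writes the decision rule explicitly.
def is_hot_hand_coded(temperature_c: float) -> bool:
    return temperature_c > 25.0  # threshold chosen by the programmer

# Machine learning (decision stump): the threshold is learned from labelled examples.
def learn_threshold(examples: List[Tuple[float, bool]]) -> float:
    """Return the threshold t such that 'x > t' misclassifies the fewest examples."""
    values = sorted(x for x, _ in examples)
    # Candidate thresholds: one below the smallest value, plus the midpoints
    # between consecutive distinct values.
    candidates = [values[0] - 1.0] + [
        (a + b) / 2 for a, b in zip(values, values[1:]) if a != b
    ]

    def errors(t: float) -> int:
        return sum((x > t) != label for x, label in examples)

    return min(candidates, key=errors)

# Hypothetical training data: how one person labelled various temperatures.
training_data = [(12.0, False), (18.0, False), (21.0, False),
                 (23.0, True), (27.0, True), (30.0, True)]

threshold = learn_threshold(training_data)
print(threshold)                 # 22.0: learned from the examples
print(is_hot_hand_coded(23.0))   # False: the hand-written rule disagrees with the data
print(23.0 > threshold)          # True: the learned rule matches the labels
```

If the labelled examples change, rerunning `learn_threshold` adapts the rule automatically, whereas the hand-coded version has to be edited by a person.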

Deep Learning: Subset of a Subset

Deep Learning caught my interest because of its ability to process and interpret large sets of data. DL uses neural networks with many stacked layers to make sense of large amounts of unstructured information, such as images, audio, and text. This makes it well suited to complex tasks such as image and speech recognition.
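To illustrate what “layers” means in a neural network, here is a minimal sketch in plain Python of a forward pass through a small network with two hidden layers. The weights are hard-coded, hypothetical numbers chosen only so the arithmetic is easy to follow. In real Deep Learning, networks are much larger, their weights are learned from data through training (typically backpropagation), and they are built with dedicated libraries rather than by hand. This sketch shows only how data flows through the stacked layers and leaves out training entirely.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]
Layer = Tuple[Matrix, Vector]  # (weights, biases); one weight row per output neuron

def relu(v: Vector) -> Vector:
    """Non-linear activation: negative values become zero."""
    return [max(0.0, x) for x in v]

def dense(inputs: Vector, weights: Matrix, biases: Vector) -> Vector:
    """Fully connected layer: each output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(inputs: Vector, layers: List[Layer]) -> Vector:
    """Pass the input through each layer in turn, applying ReLU on all but the last."""
    activation = inputs
    for i, (weights, biases) in enumerate(layers):
        activation = dense(activation, weights, biases)
        if i < len(layers) - 1:
            activation = relu(activation)
    return activation

# Hypothetical weights for a 2 -> 3 -> 2 -> 1 network (not trained, purely illustrative).
network: List[Layer] = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]], [0.0, 0.0, 0.0]),  # hidden layer 1
    ([[1.0, 1.0, 1.0], [1.0, -1.0, 0.0]], [0.0, 0.5]),           # hidden layer 2
    ([[2.0, 1.0]], [-1.0]),                                       # output layer
]

print(forward([1.0, 2.0], network))  # [4.0]
```

Each layer transforms the output of the previous one. Stacking many such layers is what lets deep networks build up increasingly abstract representations, for example from raw pixels to edges to shapes in image recognition.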

AI in the Business Sphere

AI as a Business Ally

During my professional experience, I have seen businesses undergo an AI transformation: AI is used to optimize operations, improve customer experiences, and open opportunities to innovate in ways never seen before. AI-powered analytics help predict market trends, chatbots automate customer service, and AI tools help manage supply chains efficiently.

Tangible Business Wins

AI has enormous potential to transform business practices, from small startups to large multinational companies, and its successful adoption across that whole range speaks for itself. It delivers cost savings and efficiency while giving companies a competitive advantage.

Demystifying the Fear Factor in AI

Despite all its benefits, AI has been met with doubt and fear. Common concerns include job displacement, invasion of privacy, and AI spiraling out of control. In my understanding, however, AI is a tool for augmenting human beings rather than replacing them. The ethical values that should guide the development and operation of AI include transparency, accountability, and the protection of privacy.

Conclusion

My journey of understanding and applying AI has been (and will continue to be) an enlightening one. AI, ML, and DL, each with its own unique capabilities, are not just technological tools but catalysts for innovation and business growth. Approaching AI with informed enthusiasm, rather than fear and denial, will open the door to a future where technology and humanity coexist and thrive together.